This article summarizes our publications and presentations describing how we implemented artificial intelligence solutions in modern automation systems. The solution presented here was developed and tested by KBA AUTOMATIC Sp. z o.o. and is a milestone towards Industry 4.0. It combines industrial automation with the latest developments in computer science and artificial intelligence.

Our solution includes:

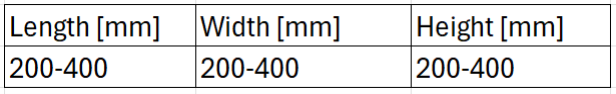

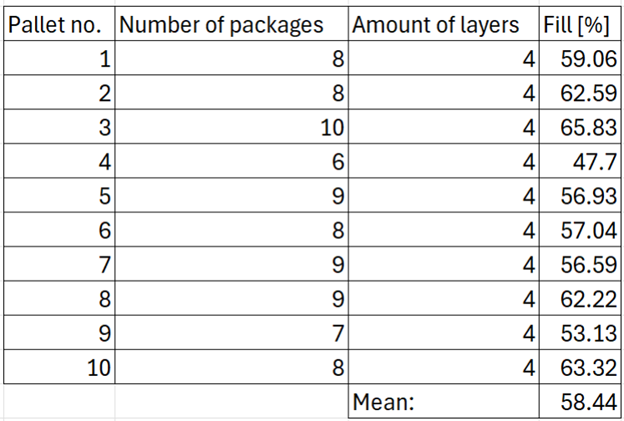

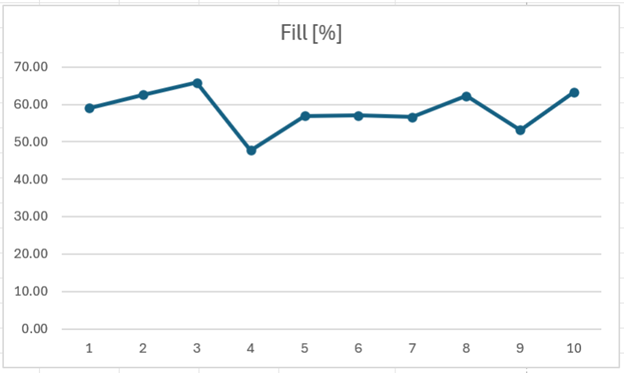

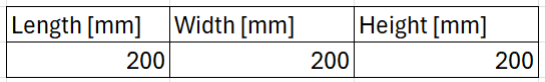

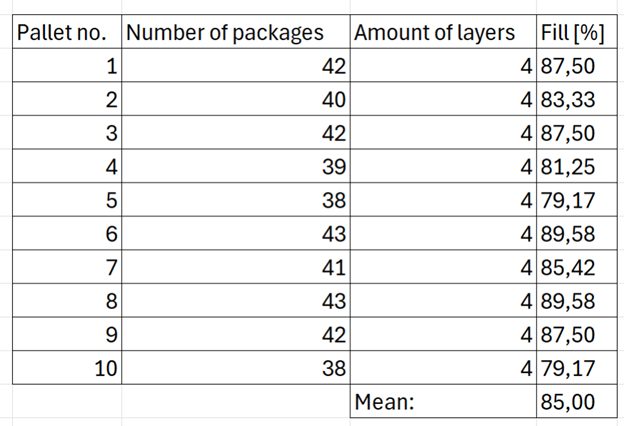

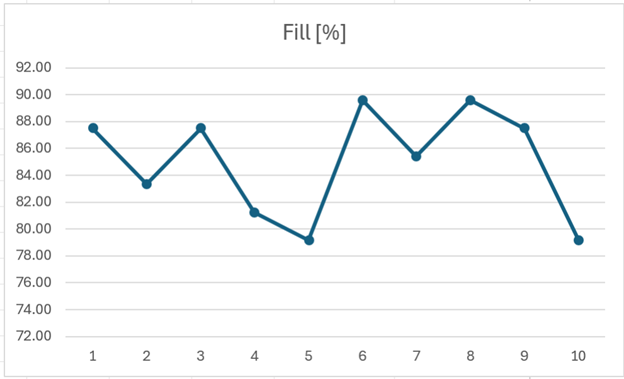

Our work has led to the development of both software and hardware for an intelligent method of palletizing heterogeneous physical objects. The interdisciplinary approach used in creating our solution allowed us to combine elements of computer science, artificial intelligence, industrial automation, and robotics. The verification studies we conducted confirmed the effectiveness of the developed system: depending on the size of the objects used, the average pallet fill ranged from 40% to 85%.
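Pallet fill can be read as a volume ratio. The sketch below assumes that "pallet fill" means the total volume of the placed packages divided by the usable pallet volume (pallet footprint times a maximum stack height); the function name, the stack-height limit, and the example dimensions are hypothetical and are not taken from our tests.

```python
from typing import Iterable, Tuple

Box = Tuple[float, float, float]  # (length, width, height) in metres


def pallet_fill_percent(boxes: Iterable[Box], pallet_l: float, pallet_w: float, max_h: float) -> float:
    """Share of the usable pallet volume occupied by the placed boxes, in percent.

    Assumption: usable volume = pallet footprint x maximum allowed stack height.
    """
    usable = pallet_l * pallet_w * max_h
    if usable <= 0:
        raise ValueError("pallet dimensions must be positive")
    placed = sum(l * w * h for l, w, h in boxes)
    return 100.0 * placed / usable


# Hypothetical example: twelve 0.4 x 0.4 x 0.3 m boxes (two layers of 3 x 2)
# on a 1.2 x 0.8 m pallet with a 1.0 m height limit.
boxes = [(0.4, 0.4, 0.3)] * 12
print(f"{pallet_fill_percent(boxes, 1.2, 0.8, 1.0):.1f}%")  # prints 60.0%
```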

In our application, we used three learning methods:

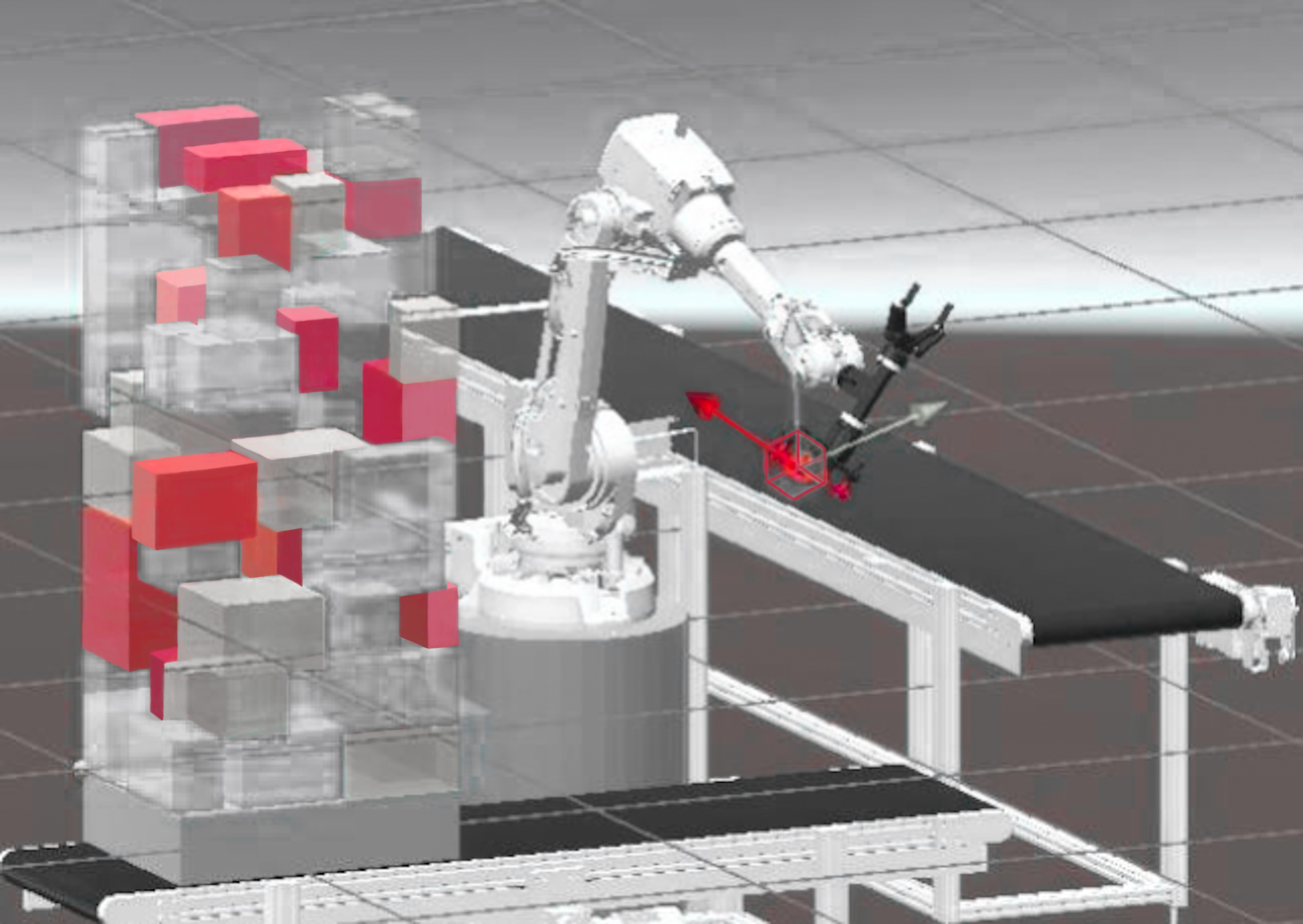

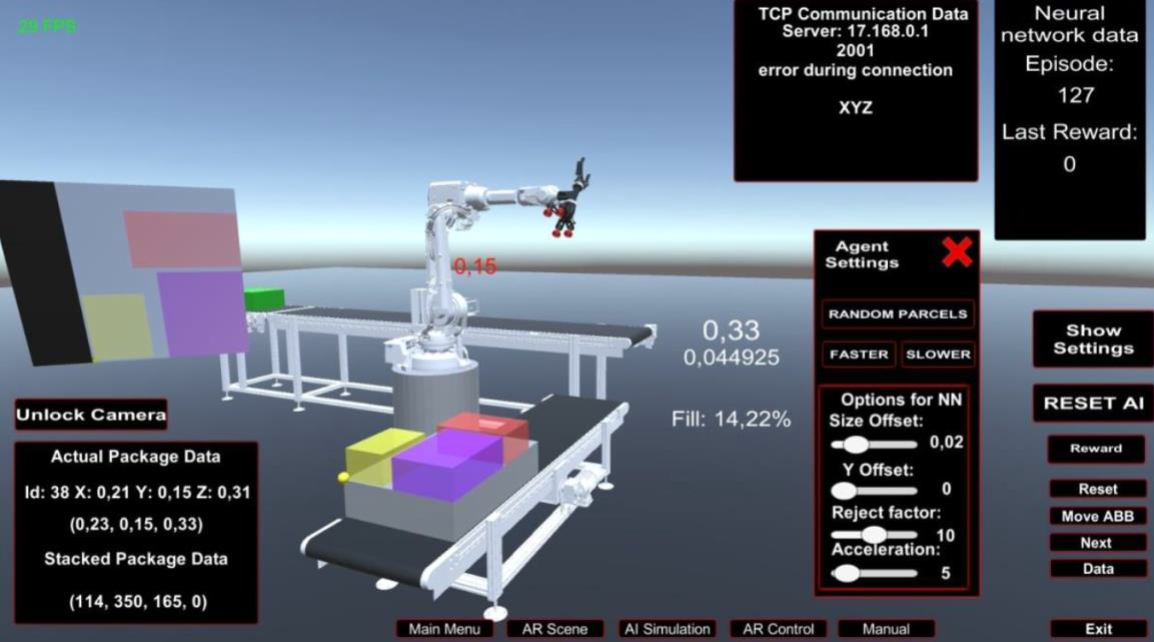

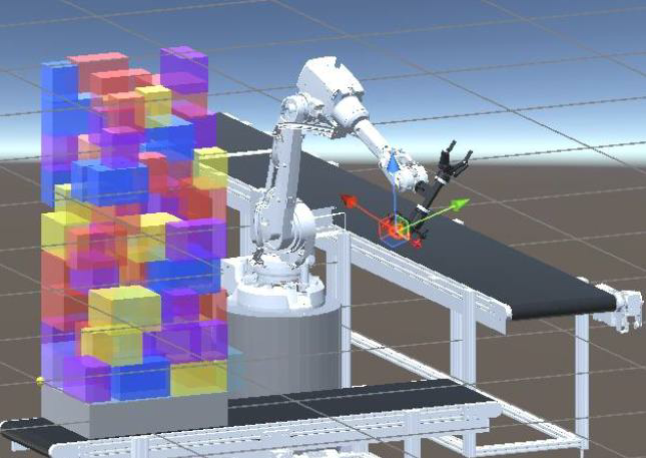

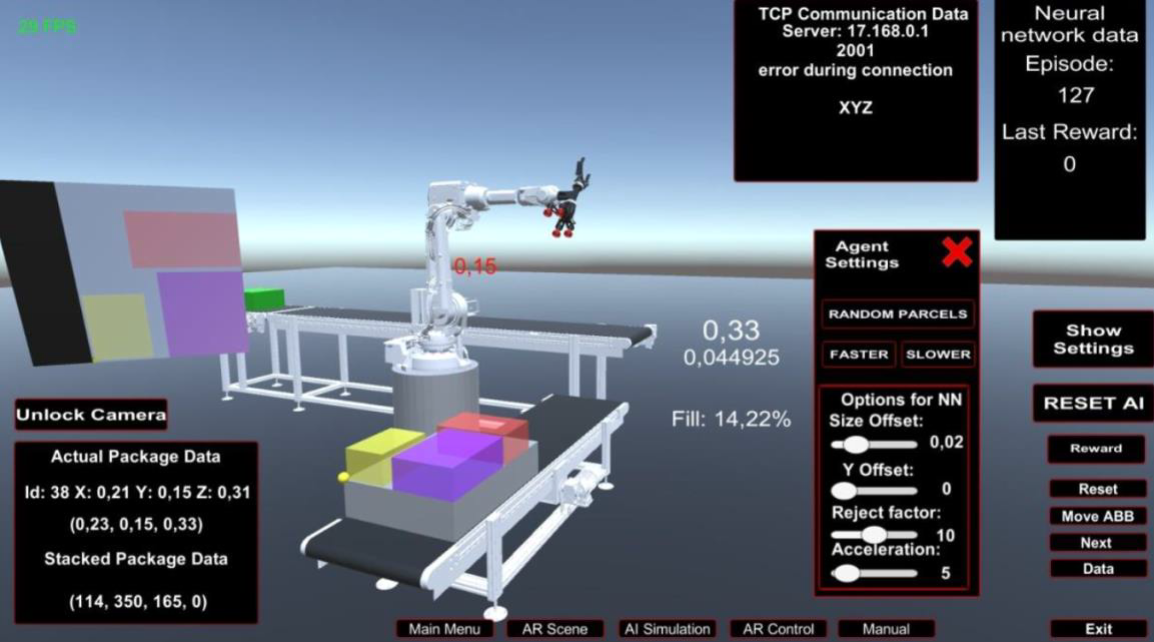

We developed a custom application, based on the Unity engine (version 2020.3.4f1), that runs the trained neural network. The application can generate advanced simulations of physical processes, including real-time simulations.

Most of the application scripts were written in C# in Visual Studio. To avoid conflicts between different software versions, we used Python virtual environments (venv), which make it easy to change the configuration of the libraries in use; in total, two separate virtual environments were created.
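Creating separate environments can be scripted with Python's standard `venv` module, which is equivalent to running `python -m venv <dir>` once per environment. This is a minimal sketch; the environment names `env-1` and `env-2` are hypothetical, since the purpose of each of our two environments is not described here.

```python
import os
import tempfile
import venv
from pathlib import Path


def create_envs(base_dir: Path, names: list[str]) -> list[Path]:
    """Create one isolated virtual environment per name under base_dir."""
    paths = []
    for name in names:
        env_dir = base_dir / name
        # with_pip=False skips bootstrapping pip so this sketch runs quickly;
        # pass with_pip=True in practice so each environment can install its own libraries.
        venv.create(env_dir, with_pip=False, symlinks=(os.name != "nt"))
        paths.append(env_dir)
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for env in create_envs(Path(tmp), ["env-1", "env-2"]):
            # every environment gets its own pyvenv.cfg describing its interpreter
            print(env.name, (env / "pyvenv.cfg").is_file())
```

Because each environment has its own `site-packages`, upgrading a library in one does not affect the other.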

The following libraries were used in the application:

Implementing the system on a physical object follows the “digital twin” paradigm.

After the development phase, we conducted verification tests to confirm that the system functions correctly. The tests were carried out in two ways:

1. A neural network trained on packages of random sizes, with verification of system performance using packages of random dimensions

2. A neural network trained on packages of the same size, with verification of system performance using packages of the same size
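The two test scenarios differ only in how package dimensions are generated. The sketch below illustrates that difference; the dimension range (0.2 to 0.6 m), the fixed size, the sample counts, and the `Package` type are hypothetical, and it is not the generator used in our Unity application.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Package:
    length: float  # metres
    width: float
    height: float


def random_packages(n: int, lo: float, hi: float, rng: random.Random) -> list[Package]:
    """Scenario 1: each dimension drawn independently and uniformly from [lo, hi]."""
    return [Package(rng.uniform(lo, hi), rng.uniform(lo, hi), rng.uniform(lo, hi)) for _ in range(n)]


def uniform_packages(n: int, size: Package) -> list[Package]:
    """Scenario 2: n copies of one fixed package size."""
    return [size] * n


rng = random.Random(42)  # fixed seed so a test run can be repeated exactly
train_random = random_packages(100, 0.2, 0.6, rng)
test_random = random_packages(50, 0.2, 0.6, rng)
train_fixed = uniform_packages(100, Package(0.4, 0.3, 0.25))
```

In scenario 1 the test set is drawn from the same range as the training set but contains different packages; in scenario 2 training and testing use the same single size.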

In order to fully leverage the potential of artificial intelligence in our system, we developed an intelligent vision system for detecting and classifying objects moving on a conveyor belt.

This system is an integral part of our Intelligent Robotic Cell 4.0, providing a leap forward in automation technology.

Our technology is characterized by:

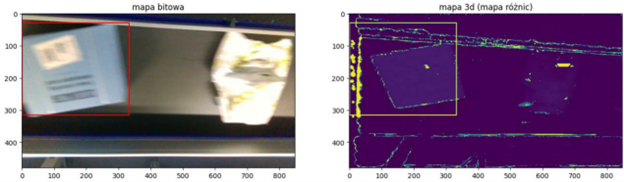

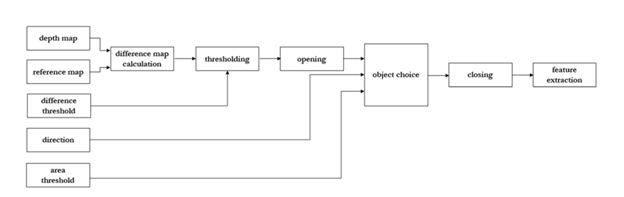

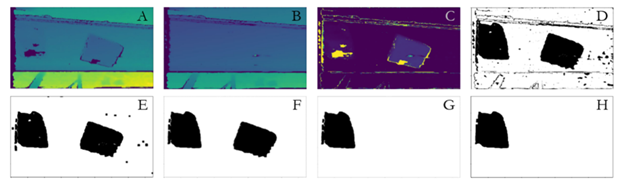

Our approach uses a depth camera that captures images of objects in three dimensions. The background is then removed from the acquired image, which is crucial for the subsequent extraction of features (dimensions, shape, and texture). Because the analysis relies on the depth map rather than on the color image, its performance does not depend on lighting, which makes the solution adaptable to various environmental conditions.
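One common way to separate objects from the background in a depth image is to compare each frame with a reference depth map of the empty conveyor. The sketch below assumes a fixed overhead camera, depth in millimetres, and `0` marking an invalid reading; the 10 mm threshold and the helper names are hypothetical, and this is not necessarily the exact procedure in our system.

```python
Depth = list[list[float]]  # depth map in millimetres, row-major


def foreground_mask(depth: Depth, background: Depth, min_height_mm: float = 10.0) -> list[list[bool]]:
    """Mark pixels at least min_height_mm closer to the camera than the empty belt.

    A depth of 0 is treated as an invalid reading and is never foreground.
    """
    return [
        [d > 0 and (bg - d) >= min_height_mm for d, bg in zip(row, bg_row)]
        for row, bg_row in zip(depth, background)
    ]


def object_depths(depth: Depth, mask: list[list[bool]]) -> list[float]:
    """Depth values that belong to the object only (background pixels dropped)."""
    return [d for row, m_row in zip(depth, mask) for d, m in zip(row, m_row) if m]


# Toy 3x4 frame: belt at 1000 mm, a 150 mm tall box in the lower middle,
# one invalid pixel (0) and one 5 mm noise pixel (995) that must be ignored.
background = [[1000.0] * 4 for _ in range(3)]
frame = [
    [1000.0, 1000.0, 1000.0, 995.0],
    [1000.0, 850.0, 850.0, 1000.0],
    [1000.0, 850.0, 0.0, 1000.0],
]
mask = foreground_mask(frame, background)
```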

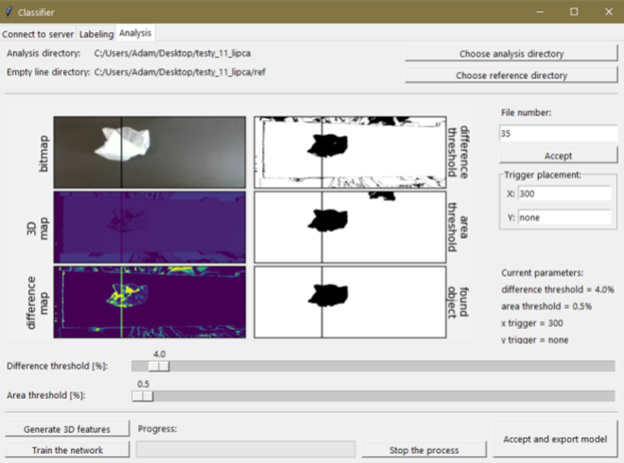

The extracted features are fed into our neural network, which classifies the objects on the conveyor belt. The system includes Python-based software with a GUI that streamlines data acquisition, neural network training, and object classification.

Note that the depth camera captures the bitmap image and the depth map at slightly different times. For moving objects, such as packages on a conveyor belt, this time difference causes a phase shift between the object positions in the bitmap image and in the depth map. For this reason, the bitmap image is not used for descriptor extraction and is shown for visualization purposes only.
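The size of this shift follows from Δx = v · Δt, where v is the belt speed and Δt the offset between the two captures. The sketch uses the 0.95 m/s package speed from our dynamic tests; the 20 ms capture offset is a hypothetical value, since the camera's actual offset is not given here.

```python
def phase_shift_mm(belt_speed_m_s: float, capture_offset_s: float) -> float:
    """Distance a package travels between the bitmap and depth-map captures, in millimetres."""
    return belt_speed_m_s * capture_offset_s * 1000.0


# 0.95 m/s belt speed, hypothetical 20 ms offset between the two captures
print(round(phase_shift_mm(0.95, 0.020), 1))  # prints 19.0
```

A shift of this order (about 2 cm) is large enough to misplace object edges, which is why descriptors are computed from the depth map alone.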

The solution consists of two layers: the first acquires and analyzes the depth camera images, and the second is our neural network, which classifies the objects.

To illustrate our technology, we include a block diagram of the depth map analysis and a visualization of the system.

Extracted Descriptors

Once the object to be analyzed has been isolated, we extract 29 descriptors. The first ten are statistical parameters of the depth map restricted to the object, and the remaining 19 describe the object's shape.
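The two descriptor groups can be illustrated with a small subset of each. The specific descriptors below (mean, standard deviation, extremes, and median of the object's depth values; area, bounding box, extent, and aspect ratio of its binary mask) are hypothetical examples and not the actual 29 descriptors used in our system.

```python
import statistics


def depth_statistics(values: list[float]) -> dict[str, float]:
    """Illustrative statistical descriptors of the object-only depth values."""
    return {
        "mean": statistics.fmean(values),
        "std": statistics.pstdev(values),
        "min": min(values),
        "max": max(values),
        "median": statistics.median(values),
    }


def shape_descriptors(mask: list[list[bool]]) -> dict[str, float]:
    """Illustrative shape descriptors computed from a binary object mask."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no object pixels")
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    area = len(pixels)
    return {
        "area_px": float(area),
        "bbox_height_px": float(height),
        "bbox_width_px": float(width),
        "extent": area / (height * width),  # share of the bounding box covered by the object
        "aspect_ratio": width / height,
    }
```

In a full pipeline, both dictionaries would be flattened into one fixed-order feature vector before normalization.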

Classification Model Description

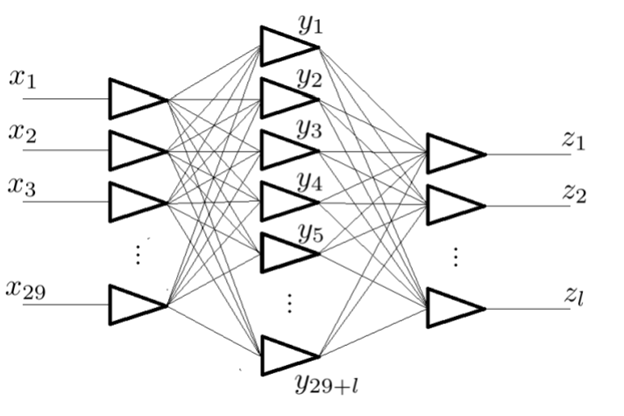

After extracting the descriptors, we normalize them to improve the model's learning and its prediction accuracy. The normalized values are then fed into the model, a three-layer artificial neural network whose layer sizes are determined by the number of features and classes: the input layer has as many neurons as there are features, and the hidden layer has as many neurons as the sum of features and classes.
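The normalization and layer-sizing rules can be sketched as follows. The normalization method is not specified above, so z-score standardization (fitted on the training set only) is an assumption here; min-max scaling would be an equally plausible choice. The output layer having one neuron per class is also an assumption.

```python
import math


def zscore_fit(samples: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-feature mean and standard deviation estimated on the training set."""
    n = len(samples)
    means = [sum(col) / n for col in zip(*samples)]
    stds = [
        # fall back to 1.0 for constant features to avoid division by zero
        math.sqrt(sum((v - m) ** 2 for v in col) / n) or 1.0
        for col, m in zip(zip(*samples), means)
    ]
    return means, stds


def zscore_apply(x: list[float], means: list[float], stds: list[float]) -> list[float]:
    """Standardize one descriptor vector with statistics from zscore_fit."""
    return [(v - m) / s for v, m, s in zip(x, means, stds)]


def layer_sizes(n_features: int, n_classes: int) -> tuple[int, int, int]:
    """Input = features, hidden = features + classes, output = classes (output size assumed)."""
    return n_features, n_features + n_classes, n_classes


# 29 descriptors and two classes (cardboard and bagged packages)
print(layer_sizes(29, 2))  # prints (29, 31, 2)
```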

Anomaly Detection Algorithm Description

In addition to the algorithms described above, we developed an anomaly detection algorithm that increases classification efficiency. It identifies features that do not belong to the class of the object being checked, without disrupting the primary classification process.
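The idea of flagging features that do not fit the predicted class can be illustrated with a simple per-class z-score check. This is a stand-in technique chosen for illustration, not our anomaly detection algorithm; the threshold `k = 3.0` and all function names are hypothetical.

```python
import math


def fit_class_profile(samples: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-feature mean and standard deviation for one class, from its training descriptors."""
    n = len(samples)
    means = [sum(col) / n for col in zip(*samples)]
    # constant features get std 1.0, so any deviation above k units is flagged
    stds = [
        math.sqrt(sum((v - m) ** 2 for v in col) / n) or 1.0
        for col, m in zip(zip(*samples), means)
    ]
    return means, stds


def anomalous_features(x: list[float], profile: tuple[list[float], list[float]], k: float = 3.0) -> list[int]:
    """Indices of features lying more than k standard deviations from the class mean."""
    means, stds = profile
    return [j for j, (v, m, s) in enumerate(zip(x, means, stds)) if abs(v - m) / s > k]


def anomaly_percent(x: list[float], profile: tuple[list[float], list[float]], k: float = 3.0) -> float:
    """Share of features flagged as anomalous, in percent."""
    return 100.0 * len(anomalous_features(x, profile, k)) / len(x)
```

Because the check runs on the class already predicted by the network, it leaves the classification itself unchanged and only adds information about how well the object fits that class.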

Both the classification result and the result of the anomaly detection algorithm are returned as percentage probabilities.
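A common way to turn a classifier's raw output scores into percentages is the softmax function. The text above does not state how our network produces its percentages, so softmax is an assumption here, and the example scores are hypothetical.

```python
import math


def softmax_percent(logits: list[float]) -> list[float]:
    """Convert raw output-layer scores into class probabilities expressed in percent."""
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [100.0 * e / total for e in exps]


# Hypothetical scores for the classes (cardboard, bagged)
print([round(p, 1) for p in softmax_percent([2.0, 0.0])])  # prints [88.1, 11.9]
```

The percentages always sum to 100, so the highest value can be reported directly as the confidence of the predicted class.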

Classified Objects

Our vision system can classify various objects based on their characteristics. The number of classes is not fixed; however, since our application is aimed at sorting diverse objects, the objects typically classified are cardboard and bagged packages.

To verify the correctness of the developed system, we implemented and tested it physically — initially statically, and later dynamically (with a package speed of 0.95 m/s). The training set consisted of 200 images, and the test set included 300 images.

The GUI designed for our system enabled efficient testing of the model and verification of the approach in the target environment.

The strengths of our solution include:

The limitations include:

The solutions we have developed complement each other and represent a higher level of automation. The combination of intelligent vision systems with automated palletizing by an industrial robot emphasizes quality, efficiency, precision, and innovation. Our system is universal, easily adaptable, and capable of working in various environmental conditions. These features keep it in line with modern standards and reduce costs when the system needs to be modified.

The next section lists the conferences at which we presented our solution.